The goal of this assignment is to write our own

software for the Pioneer 1 robot that will allow it to

autonomously navigate the Libra Complex, locate soda cans, and

bring them to a designated drop off point.


Proposal

The Use of Subsumption Architecture to Program

a Pioneer 1 Robot to Autonomously Locate and Retrieve Soda

Cans Located in the Libra Complex

Ross Luengen and Seema Patel

Harvey Mudd

College, Claremont, CA

Objective

The objective of this project is to implement

the subsumption based robot control architecture proposed by

Rodney Brooks of MIT on the ActivMedia Pioneer 1 Robot to

complete a soda can retrieval task.

Abstract

Rodney Brooks' subsumption based control

architecture will be implemented in a fairly standard coding

environment to produce an emergent behavior of object

retrieval. The program will run on a laptop using FreeBSD and

communicate via serial connection with an ActivMedia Pioneer 1

robot. A USB camera will be used to gain visual stimuli for

object location. Using an inheritance schema, modular

behaviors will simultaneously run to manage various sensors

depending on the behavior's purpose. Ultimately, the robot

will be able to autonomously avoid all objects while searching

for a soda can, locate and retrieve the can, and then return

to a designated drop zone. The implementation will provide

complete external control of the Pioneer (no pre-built

software) as well as provide a very generic platform for

future applications on other hardware or emergent behavioral

systems.

Introduction

The Subsumption control paradigm will be

implemented for the ActivMedia Pioneer 1 on a FreeBSD 4.2

based laptop in c++. The project is broken into three major

sections. First, the control software will be implemented for

the Pioneer 1 via a serial connection. This includes

implementing two-way serial communications for sensor data and

control commands. Secondly, a behavioral system will be

wrapped around the control code. This system will control the

hold and release mechanisms discussed in Brooks' paper.

Thirdly, actual behaviors will be developed for the following

desired effects: battery power saver, avoid obstacles/walls,

reflexive grasp mechanism, collision handling, wander/explore,

soda vision identification, and return home. The combination

should result in a highly complex behavior capable of adapting

to new and unknown environments, and should be an interesting

exploration into the field of robot control paradigms.

This solution provides many different

intriguing aspects of robotics. We hope to construct a very

high level behavior from fairly simple programming.

Hierarchical robotic design requires huge assumptions

regarding the environment to allow for proper robotic

planning. We hope to evolve a system that is highly adaptive,

both for our project and something that is highly useful and

malleable for future robotic applications. Much of the

programming structure we are striving for is independent of

robotic platform or behavior objectives. Our behaviors will

serve as a proof of concept, showing the usefulness and

modularity provided by our programming scheme.

If properly implemented, our project has many

practical applications both in our software schema and in the

real world. A common use for such highly autonomous mobile

robotics is the retrieval of objects in locations too

dangerous and too difficult for humans to travel. This

includes space, toxic and nuclear hot spots, caves and

crevasses, collapsed buildings, or any number of other places.

In its conception, our control code is modular enough that our

robot will be capable of recognizing any number of objectives,

and will not be limited to soda cans. Any number of smaller

behaviors can be modified, added, or removed to result in

different emergent behavior. In addition, our code will be

usable by future robotic projects wishing to take advantage of

either the reactive or the hybrid control models.

A discussion of materials needed as well as a

timeline for development will follow an explanation of past

work, related work, goals of the project, and a work plan.

Past Work and Related Work

Rodney Brooks first conceived subsumption

architecture at MIT in the mid 1980's as a reaction to the

difficulties in hierarchical design as a practical technical

solution for implementing behavior patterns. His idea assigns

specific priority levels to behavior mechanics thereby solving

conflicting decisions. If two behaviors come to conflict

regarding a certain movement, one will take priority according

to a pre-determined setting. For example, squirrels exhibit

"obtain food" and "avoid human" behaviors. In Brooks'

architecture, one behavior, "obtain food", would be given

priority. Although the avoid human behavior commands a

squirrel to avoid a human feeding nuts, the obtain food

behavior takes priority, and the squirrel quickly snatches the

food from the human. While this may not be how thinking

entities work, this viewpoint is easily implemented on robotic

machines.
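The priority rule in the squirrel example can be sketched in a few lines of C++. This is only an illustration under stated assumptions: the `Proposal` struct, the `arbitrate` function, and the convention that a larger number wins are all hypothetical and are not taken from Brooks' paper or from the project code.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical type for illustration; not part of the project code.
struct Proposal {
    std::string behavior;  // name of the behavior making the request
    int priority;          // assumed convention: larger value wins a conflict
    std::string action;    // what the behavior wants the body to do
};

// Return the index of the highest-priority proposal, or -1 if no
// behavior is currently asking for control.
int arbitrate(const std::vector<Proposal>& proposals) {
    int winner = -1;
    for (int i = 0; i < static_cast<int>(proposals.size()); ++i) {
        assert(proposals[i].priority >= 0);
        if (winner < 0 || proposals[i].priority > proposals[winner].priority) {
            winner = i;
        }
    }
    return winner;
}
```

With "avoid human" at priority 1 and "obtain food" at priority 2, `arbitrate` picks "obtain food", which matches the squirrel snatching the nut.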

Our project takes on the application of Brooks'

theory in a universal programming environment. An open source,

c++ framework for the subsumption architecture will provide

universal programmability for the community. By simply

replacing the control class, any robot (including a

simulation) can be driven with our behavior code.

Additionally, our architecture makes modifications simple and

highly modular.

Goals of Present Work and Work Plan

Our goals for this project are to write

software that will allow a Pioneer 1 robot to autonomously

navigate through the Libra Complex, locate a soda can,

verbalize the fact that it has located a can, and return the

can to a drop zone. The emergent behavior will be a

combination of many localized behaviors, each of which is

unaware of the others' existence. These behaviors will be

constructed as small and individual as possible in order to

increase the adaptability of the system and to further expand

Brooks' theoretical architecture.

The robot control architecture is designed to

implement all the operability of the ActivMedia Pioneer 1

robot including the optional gripper package. It will store

state variables according to the on board sensors, thereby

representing current sensor states and not a representation of

the world. Functions will be provided for operability of the

robot. First, the serial connection between the robot and the

computer will be initiated. This will be facilitated by three

commands - two sync commands and an open command as specified

by the ActivMedia manual. Secondly, an interface will be

developed for all command structures present in the pioneer

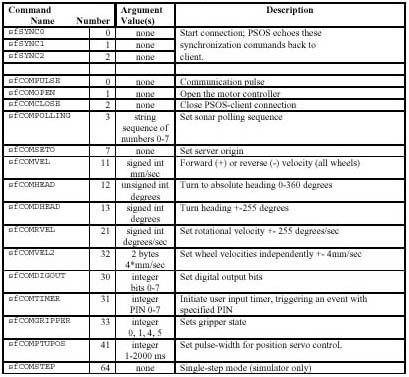

robot. A list of these commands is found in figure 1, while

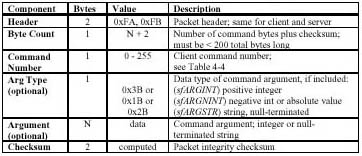

the command structure is found in figure 2.

Secondly, update packets will be received from

the robot itself. These include sensor updates from the robot,

including physical position, wheel velocity, sonar readings,

gripper states, wheel stall status, bump sensors, and other

information. This packet structure is defined in figure 3. The

code operating the packet will be handled in a separate thread

that will consistently query the robot for new data.

Furthermore, this code will send a command to the robot

indicating the attached computer is still alive and wishing

new data.

With the control software written, the project

will move to implementation and integration of behavior code.

Much of this code will be borrowed from the robotics team's

effort to implement subsumption architecture on its robots.

The code will be debugged and extended for full functionality.

The code will use a behavior manager that decides which

behavior thread needs to be halted (via a semaphore) and which

threads can be allowed to continue at each point in time.

This will also include a behavior class from which extended

behaviors can be implemented. The inheritance from this class

will provide modularity and will facilitate the simple

inclusion of new behaviors into the entire schema.
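A minimal sketch of how a behavior base class and a manager might fit together is given here. It is not the robotics team's code: the class names, the `wantsControl` hook, and the numeric priority convention (larger number takes precedence) are assumptions, and the pause flag stands in for the semaphore-controlled threads described above so that the example stays single-threaded.

```cpp
#include <cassert>
#include <vector>

// Hypothetical base class; new behaviors inherit from it.
class Behavior {
public:
    explicit Behavior(int priority) : priority_(priority), paused_(false) {}
    virtual ~Behavior() {}

    // Derived behaviors report whether they need the robot right now.
    virtual bool wantsControl() const = 0;

    int priority() const { return priority_; }
    bool paused() const { return paused_; }
    void pause() { paused_ = true; }
    void resume() { paused_ = false; }

private:
    int priority_;
    bool paused_;
};

// Pauses every behavior that is outranked by one requesting control.
class BehaviorManager {
public:
    void add(Behavior* b) {
        assert(b != nullptr);
        behaviors_.push_back(b);
    }

    void update() {
        int top = -1;  // highest priority among behaviors requesting control
        for (Behavior* b : behaviors_) {
            if (b->wantsControl() && b->priority() > top) top = b->priority();
        }
        for (Behavior* b : behaviors_) {
            if (b->priority() < top) b->pause(); else b->resume();
        }
    }

private:
    std::vector<Behavior*> behaviors_;
};

// Example derived behavior; the flag stands in for a real sensor check.
class FlagBehavior : public Behavior {
public:
    FlagBehavior(int priority, bool a) : Behavior(priority), active(a) {}
    bool wantsControl() const override { return active; }
    bool active;
};
```

Adding a new behavior in this sketch means deriving one more class and registering it with `add`; the manager itself does not change.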

Finally, behaviors will be implemented to

achieve our goal. These behaviors will range in complexity

from simple collision behaviors that will manage and respond

to the bump sensors, to behaviors that will manage our USB

camera. To avoid battery drain a behavior will monitor battery

usage and warn against complete system failure due to battery

problems. Another system will manage the proximal sonar

readings to make sure any object is not too close. If an

object gets too close to the robot, the behavior will direct

the robot away from the obstacle. Above everything is an

explore or wander behavior which will randomly move the robot

through the environment regardless of obstacles. The explore

behavior will rely on lower behaviors (or more basic

behaviors) to halt its actions before wandering into an

undesirable state.

The last behavior to mention is the vision

behavior, which will use a USB camera to gain visual

environment clues. This behavior is singled out due to the

inherent complexity of vision algorithms on computers.

Hopefully it will be able to locate and recognize objects

within its field of view, and direct the robot's motion toward

desirable objects. With this functionality, we hope to

demonstrate the universality of our system by providing at

least two object recognition models. This way, our robot will

be able to detect and find completely different types of soda

cans, being able to actively distinguish the difference.

Materials

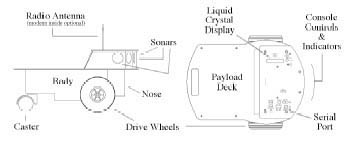

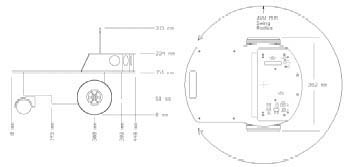

The primary piece of equipment that we will

require is the ActivMedia Pioneer 1 robot with gripper package

(see figures 4-5 for Pioneer schematics). We will also need a

laptop computer to execute our program (see figure 6 for

mounting diagram), a USB camera to obtain visual images of the

robot's surroundings, and a speech software package to allow

the robot to verbalize commands and information back to the

user. For this project we will use Professor Dodds' research

lab's ActivMedia Pioneer 1 robot, the robotics team's Dell

Inspiron 3800 laptop, the robotics team's D-Link DSB-C300 USB

camera, and an open source speech synthesis package called

Festival. All required equipment listed has been obtained.

Timeline

| Date | Milestone |
| --- | --- |
| February 5, 2001 | Completed most of the RobotControl architecture. Finished basic control commands, including velocity settings and sonar updates. Major lag time in all robot commands. |
| February 16, 2001 | Received DSB-C300 Camera. Operating under Linux using ov511 driver. |
| February 17, 2001 | Speech synthesis software test program running properly under Linux. |
| February 22, 2001 | Complete the sonar processing software. Finalize explore, avoid walls, and collision behaviors. Add grasp and battery behaviors. Integrate the speech software package. |
| February 19-24, 2001 | Re-compile laptop kernel for use with sound and ov511 USB Camera driver. |
| March 9, 2001 | Start visual image processing software. |
| April 2, 2001 | Complete visual tracking software. Integrate remaining behaviors and start debug phase. |
| April 30, 2001 | Complete subsumption architecture code. Successfully find, move, and deposit items marked by our vision recognition software. Complete emergent task. |

References

ActivMedia Pioneer 1

Robotics Manual.

Brooks, Rodney. Achieving Artificial

Intelligence Through Building Robots. AI Memo 899, MIT

Press, 1986.

Figures

Figure 1:

ActivMedia Pioneer 1 Command List

Figure 2:

ActivMedia Pioneer 1 Serial Command Structure

Figure 3:

ActivMedia Pioneer Information Packet Protocol

Figure 4:

ActivMedia Pioneer 1 Robot diagram (without gripper

assembly)

Figure 5:

ActivMedia Pioneer 1 Robot dimensions (without gripper

assembly)

Figure 6:

ActivMedia Pioneer 1 Robot laptop mount configuration (without

gripper assembly)


Our Solution

Our solution will be developed over the course

of the spring semester, 2001. We will divide our task into

sub-projects, each of which is due according to the following

schedule:

-

Project 1 - Monday, February 5

-

Project 2 - Monday, February 26

-

Project 3 - Monday, April 2

-

Project 4 - Friday, May 4


Project 1

(Ross Luengen and Katie

Ray)

Purpose

The purpose of this project is to bypass the

built-in software and build from scratch the code needed for

relatively simple behaviors.

Objectives

Method

In order to implement the behaviors a large

portion of robot control code needed to be generated. No

Pioneer code was utilized; instead, the serial communications

constructs were implemented in C++ on the laptop computer.

Most of the code that was used was taken from the Robotics

team, including a serial communications package and behavior

hierarchy, but a lot of it did not work (and in fact did not

compile). First, this code needed to be brought to operating

order and reasonable integration with each portion. The serial

communications code was refined and templated.

The following is a list of brief descriptions

of the functions that were modified/written to control the

robot:

-

Start connection

-

Communication pulse

-

Open the motor controller

-

Set sonar polling sequence

-

Set server origin

-

Forward (+) or reverse (-) velocity

-

Turn to an absolute heading specified

(between 0 and 360)

-

Turn heading +/- 255 degrees

-

Set rotational velocity +/- 255

degrees/sec

-

Set wheel velocities independently +/- 4

mm/sec

-

Set digital output bits

-

Initiate user input timer

-

Set gripper state

-

Set pulse-width for position servo

control

-

Determine x-position and y-position

-

Determine the velocity of each of the

wheels

-

Battery charge

-

Get most recent sonar readings

The behaviors are implemented under the

framework provided by the robotics behavior structure. Each

behavior acts independently in its own thread. Each behavior

can be paused or resumed according to its priority level and

other behaviors' requests.

-

Explore

The explore behavior is ignorant

of sonar sensing data insofar as its directional value is

concerned. It chooses a random heading to follow within a

certain maximum. After deciding on a heading, it checks to

see how far the sonar readings are in that direction. It

then sets the velocity relative to the distance remaining. The

larger the distance, the faster the robot will explore. The

shorter the distance, the more careful it will be. The behavior

sleeps for a certain period of time, and then restarts from

the beginning. Explore is not a wall following algorithm,

rather it relies on random movement to successfully cover an

area. It also relies on AvoidObject to repel itself away

from walls.

-

AvoidObject

The AvoidObject behavior is

written to determine how the robot should react to close

objects. It first determines the nearest and furthest

sonar sensor reading. If the nearest obstacle is within a

minimal threshold, then the AvoidObject behavior knows it

needs to intervene and cease all other behaviors. If the

farthest obstacle is still within our minimum allowable

distance, then the robot must turn around (facing a

wall/corner). Otherwise we turn towards the direction of the

sonar that is detecting the farthest distance. Once the

AvoidObject behavior determines that it is safe, it resumes

all other behaviors.
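The decisions made by Explore and AvoidObject can be sketched roughly as follows. This is an illustration under assumptions, not the project source: the function names, the `maxRange` and `minDistance` parameters, the linear speed scaling, and the `AvoidAction` enum are all hypothetical, and distances are taken to be plain numbers in one consistent unit.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cstddef>

// Explore: scale forward speed by how much open space the sonar reports
// along the chosen heading. A linear scale is an assumption.
double exploreVelocity(double clearDistance, double maxRange, double maxVelocity) {
    assert(maxRange > 0.0);
    double fraction = std::max(0.0, std::min(1.0, clearDistance / maxRange));
    return fraction * maxVelocity;
}

enum class AvoidAction { None, TurnAround, TurnTowardFarthest };

struct AvoidDecision {
    AvoidAction action;
    std::size_t sonarIndex;  // meaningful only for TurnTowardFarthest
};

// AvoidObject: readings holds one distance per sonar transducer.
// minDistance is a hypothetical safety threshold in the same unit.
AvoidDecision decideAvoid(const std::array<double, 7>& readings, double minDistance) {
    assert(minDistance > 0.0);
    auto nearest = std::min_element(readings.begin(), readings.end());
    auto farthest = std::max_element(readings.begin(), readings.end());
    if (*nearest >= minDistance) {
        return {AvoidAction::None, 0};        // nothing close: do not intervene
    }
    if (*farthest < minDistance) {
        return {AvoidAction::TurnAround, 0};  // boxed in by a wall or corner
    }
    // Otherwise head for the sonar that sees the most open space.
    return {AvoidAction::TurnTowardFarthest,
            static_cast<std::size_t>(farthest - readings.begin())};
}
```

In the real system each of these would run inside its own behavior thread, with AvoidObject pausing the others while it acts.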

Source

Code:

Summary

The Pioneer came with code for basic motion but

we thought it better to start from the ground level and build

up. All communication was written and conformed to the serial

control spec for the pioneer robot. It now moves forwards,

backwards, can rotate, and the grippers can be operated upon.

The Pioneer will wander randomly according to the Explore

behavior and will try to avoid obstacles based on the

AvoidObject behavior. The gripper opens and closes according

to command as well as moves up and

down.


Project 2

The Goals

The goals of this project include:

-

Get the sonar processing behavior to work

-

Implement the speech package

-

Set up the USB camera and write a behavior

that will retrieve images

-

Change the navigation from a relative

velocity implementation to a translational velocity

implementation

Our Work

The first goal we tackled was getting the sonar

processing behavior to work. The Pioneer has 7 sonar

transducers (0-6). Previously we would determine a

transducer's value by storing several values in an array and

then averaging them to determine a final value. This caused

some problems due to the fact that the transducers will often

record random bad values. We attempted to solve this problem

by determining the standard deviation of the values in the

array and then throwing out any new values that were not

within the standard deviation. This filtering schema turned

out to be too conservative as legitimate values recorded when

the robot was moving quickly or turning were being thrown out.

Finally we came up with the idea of having two arrays for each

transducer, one that stores the legitimate sonar values and a

"buffer." When a new value is obtained by the transducer the

program checks it against the previously stored value in the

sonar array. If the two values are within a certain threshold

the new value is added to the sonar array. If they are not

within the threshold, the new value is placed in the buffer

array. Then the transducer obtains another sonar value. If

this second sonar value is within the threshold it is added to

the sonar array and the buffer is deleted. If it isn't within

the threshold it's compared to the previously stored value in

the buffer array. If those two values are within a threshold

they are both copied into the sonar array. If they aren't

within a threshold the new value is added to the buffer array.

If the buffer array ever becomes full it is copied into the

sonar array. For example:

Say that the threshold has been set to 2, and

that the sonar array for a transducer is the following:

Say that the buffer array is empty:

Now, when compared to the last value in the

sonar array, the next value we get from the transducer can

either be within the threshold or outside the threshold. If

it's within the threshold it's just added to the sonar array.

If it's outside of the threshold it's added to the buffer

array. So if our next value was 4, we'd have:

Now, one of two things can happen. The next

value can either be within or outside the sonar threshold. If

the next value is within the sonar threshold, it's copied into

the sonar array and the buffer is deleted. So if the next

value was 6 we'd now have:

However, if the next value was outside the

sonar threshold it would be compared to the last value in the

buffer array (in this case, the 4). If it is within the

threshold in the buffer array, both values are copied into the

sonar array and the buffer array is deleted. So if our next

value was 3, we'd get:

But if our next value isn't within the buffer

threshold, it's just added to the buffer. If the buffer

becomes full (which would happen if successive values continue

to be outside the threshold), the buffer is just copied into

the sonar array. This particular situation tends to happen

when the robot is turning. So if our next value was 1, we'd

have:

I hope that wasn't too confusing.
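For readers who prefer code, the two-array filter described above might look roughly like this for a single transducer. It is a sketch under assumptions: the class name `SonarFilter`, the configurable buffer capacity, and the unbounded history vector are not from the project code, and "within the threshold" is taken to mean an absolute difference no larger than the threshold.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

class SonarFilter {
public:
    SonarFilter(double threshold, std::size_t bufferCapacity)
        : threshold_(threshold), capacity_(bufferCapacity) {
        assert(threshold >= 0.0 && bufferCapacity > 0);
    }

    void add(double value) {
        // First reading, or one that agrees with the last accepted reading:
        // accept it and discard anything held in the buffer.
        if (sonar_.empty() || agrees(value, sonar_.back())) {
            sonar_.push_back(value);
            buffer_.clear();
            return;
        }
        // Agrees with the last suspicious reading: accept both.
        if (!buffer_.empty() && agrees(value, buffer_.back())) {
            sonar_.push_back(buffer_.back());
            sonar_.push_back(value);
            buffer_.clear();
            return;
        }
        // Still suspicious: hold it, and give in once the buffer fills up.
        buffer_.push_back(value);
        if (buffer_.size() == capacity_) {
            sonar_.insert(sonar_.end(), buffer_.begin(), buffer_.end());
            buffer_.clear();
        }
    }

    const std::vector<double>& sonar() const { return sonar_; }
    const std::vector<double>& buffer() const { return buffer_; }

private:
    bool agrees(double a, double b) const { return std::fabs(a - b) <= threshold_; }

    double threshold_;
    std::size_t capacity_;
    std::vector<double> sonar_;   // accepted readings
    std::vector<double> buffer_;  // readings waiting for confirmation
};
```

As a walk-through with a threshold of 2, suppose the last accepted value is 7 (an assumed starting point chosen to fit the example values). A reading of 4 goes to the buffer. If the next reading is 6, it joins the sonar array and the buffer is cleared. If it is 3 instead, both 4 and 3 are copied into the sonar array. If it is 1, it is added to the buffer next to the 4.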

The next task we worked on was setting up the

USB camera. It turns out that surprise, surprise, Video for

Linux doesn't work under FreeBSD. The easiest solution (we

thought) to this problem was to install Linux on the laptop

and just run Linux. This turned out to be more complicated

than expected (see the Problems Encountered section), but

eventually we got Linux up and running and our vision behavior

retrieving images. The vision behavior basically works by

reading in and displaying frames taken from the camera.

Our next goal was to get the Festival speech

package working. Originally we implemented Festival under

FreeBSD. It worked except that FreeBSD doesn't have an audio

driver so we never actually heard what the program was saying.

Once we got Linux installed and twiddled with some libraries

Festival worked just fine.

Our last task was to change the navigation from

a relative velocity implementation to a translational velocity

implementation. We felt that doing this would result in a less

spastic motion. This appeared to be a relatively easy task

considering that there is a built in command for translational

velocities. After having some problems with command packet

specifications, the translational velocity implementation

appears to work. One of the next things we're going to do is test

the translational velocity functions more extensively.

Although it wasn't one of our goals we ended up

mapping out almost all of the bytes in the SIP packet (the

information packet we receive from the robot). From this we

were able to figure out how to tell when the bump sensors have

been hit. We used this information to develop a collision

behavior. We were also able to determine that the forward

break beam in the gripper does not work (due to a hardware

problem) but the back break beam does. We used this

information to make a grasp behavior that controls the

gripper. Now the gripper will pick up objects whenever its

back break beam is tripped.

You can find a copy of our code here

Problems We Encountered

The major problem we encountered was that video

for Linux does not work under FreeBSD. Therefore, in order to

get the video for Linux code working so that we could capture

images, we had to install Linux on the laptop. The big

advantage of installing Linux was that we got the Festival

Speech package working so that you could actually hear what

the program was saying. The big disadvantage was that it took

a week and a half to get Linux on the machine and another half

week to get our code to compile.

The next problem was that we couldn't get the

Festival package to compile under Linux. It turned out that

there were name space issues between Festival, stl, and

string. Once we resolved those issues Festival worked just

fine.

We are still encountering problems with lag

time. We've reduced the lag time significantly from ~10 seconds

to ~0.1 second. This is acceptable for our purposes, but when

we get a large amount of traffic over the serial port we lose

sync. When sync is lost we end up re-obtaining a lag time of

~10 seconds. In order to fix this we have two options: fiddle

around with sleep times and see if we can find some magic

number that works, or include a time variable in robot control

to indicate the last time data was taken. This second solution

seems to be rather ugly and tedious so hopefully we will be

able to make the first solution work.
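For comparison, the second option (a time variable recording when data was last taken) could be sketched as below. This is a hypothetical illustration and not something the project implemented: `TimedReading`, `isStale`, and the `maxAge` parameter are invented names, and `std::chrono::steady_clock` is simply one reasonable clock choice.

```cpp
#include <cassert>
#include <chrono>

using Clock = std::chrono::steady_clock;

// Hypothetical wrapper: a sensor value plus the time it was read.
struct TimedReading {
    double value;
    Clock::time_point takenAt;
};

// True when the reading is older than maxAge and should not be trusted,
// for example after sync has been lost on the serial port.
bool isStale(const TimedReading& r, Clock::time_point now,
             std::chrono::milliseconds maxAge) {
    assert(maxAge.count() >= 0);
    return now - r.takenAt > maxAge;
}
```

A behavior could then skip any reading for which `isStale` returns true, at the cost of threading a timestamp through every sensor access.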

The next problem we encountered was that our

program kept causing segmentation faults whenever we tried to

run our vision behavior. When we commented out our vision

behavior the program segmentation faulted when we tried to

quit it. It turned out that we were trying to do a string

concatenation without enough memory. We fixed the problem by

declaring a new string of larger length.

Evaluation

In terms of meeting our goals we did rather

well. Our laptop now runs under Linux and the USB camera and

the Festival Speech package both work. Our vision behavior

works and our robot's navigation was successfully changed to

translational velocities from relative velocities. Even though

they weren't part of our original goals we were able to

develop collision and grasp behaviors using the information we

found from mapping out nearly all of the bytes in the SIP

packet.

The Pioneer in Action

Here are some pictures of the Pioneer:

This is a side view of the Pioneer. The little gold circles are the sonar devices and the white thing on the top pointing at the coke can is the USB camera.

This is another side view of the Pioneer but with the laptop mounted on its back. This is how the Pioneer will be configured when it is running.

This is a front view of the Pioneer. You can see 5 of the 7 sonar devices, as well as the USB camera.

This is a snapshot from the USB camera showing a coke can located a couple of feet in front of the robot. As you can see we will have the camera configured so that it should be able to see an object from several feet away, but it won't be able to see the object when the object is on the floor butted up against the robot.


Project 3

The Goals

The goals of this project include:

-

Implement a keyboard interface to the robot

that will allow us to control the direction and velocity of

the robot (for testing purposes)

-

Use the interface to extensively test our set

translational velocity algorithm (this was mentioned as a

future goal in the last project)

-

Get the avoid walls behavior to work

-

Write software that will allow the robot to

identify coke cans and head towards them

Our Work

The first goal we worked on was implementing

keyboard control of the robot's velocity and direction. This

was accomplished by adapting Solaris code written by

Professor Dodds.

The second goal we worked on was using the

keyboard interface to extensively test our set translational

velocity algorithm. Originally, empirical testing led us to

believe that there was a physical maximum velocity of about

511 mm/sec. We set this number as a constant called

MAX_VELOCITY and sent velocity values to the robot by

multiplying MAX_VELOCITY by a positive or negative fraction of

1 and dividing that by 4. (The robot takes in the velocities

as 2 bytes, which are then multiplied by 4 mm/sec.) After the

software for the keyboard control was implemented, testing of

our set velocity algorithm immediately showed a problem.

Although setting forward velocities appeared to work correctly,

anytime we tried to change direction and move backwards the

robot would speed up to MAX_VELOCITY and continue moving

forwards. More testing showed that we were incorrect in our

perception of how the robot accepts velocity values. It turns

out that for each byte, the first bit controls if the

direction is forward (0) or reverse (1). The rest of the bits

control the magnitude of that direction in the following

manner:

Modifying our set velocity algorithms to

conform to the above format seems to have fixed our problems.

Unfortunately, reverse engineering the process to convert the

velocity values sent back by the robot turned out to be more

difficult than we originally thought. Fixing this conversion

problem is one of the first things we want to address in the

next project.
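The sign-and-magnitude format for outgoing velocities can be sketched as follows. The exact bit layout is an assumption, since the original table is not reproduced here: "first bit" is taken to mean the most significant bit, and the remaining seven bits are taken to hold the magnitude in units of 4 mm/sec. That reading gives a ceiling of 127 x 4 = 508 mm/sec, which is at least consistent with the observed maximum of about 511 mm/sec. The function name is hypothetical.

```cpp
#include <cassert>
#include <cstdint>
#include <cstdlib>

// Assumed layout: bit 7 is the direction (0 forward, 1 reverse) and
// bits 0-6 hold the magnitude in units of 4 mm/sec.
std::uint8_t encodeWheelVelocity(int mmPerSec) {
    int magnitude = std::abs(mmPerSec) / 4;  // convert to 4 mm/sec units
    if (magnitude > 127) magnitude = 127;    // clamp to seven bits
    assert(magnitude >= 0 && magnitude <= 127);
    std::uint8_t byte = static_cast<std::uint8_t>(magnitude);
    if (mmPerSec < 0) byte |= 0x80;          // set the reverse bit
    return byte;
}
```

Only the encoding direction is shown; converting the values the robot reports back into velocities is the part that remains unsolved.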

Next we worked on getting our avoid walls

behavior to work. Originally, we thought that the problem

might reside in the sonar. The first thing we wanted to do was

figure out a way to display the sonar data so that we could

see what the robot was "seeing." This was accomplished by

writing a GUI that displays the sonar data for each transducer

as a cone. The size and color of the cones are a function of

sonar magnitude. After using the GUI to test the sonar, we

discovered that there appears to be a delay in the sonar

processing software. More testing leads us to believe that the

origin of this delay may be the massive amount of processing

power that the GUI itself requires. So, although the GUI was a

good idea and looks spiffy, it may have harmed us more than it

helped us. After testing the sonar some more we are pretty

certain that it works and that our problems with the avoid

walls behavior are being caused by the incorrectness of the

velocities that are being returned by the robot.

The last thing we worked on was writing

software that will allow the robot to identify coke cans and

head towards them. The software works by setting color

thresholds and centering the robot on any colors it detects

within the threshold. It appears to be working correctly.
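A minimal sketch of threshold-based color tracking is shown below. It assumes the frame is already available as a row-major array of RGB pixels; the `Rgb` and `ColorRange` types, the function names, and the normalized offset in [-1, 1] are all hypothetical and not taken from the project's vision behavior.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

struct Rgb { std::uint8_t r, g, b; };

// Hypothetical inclusive per-channel bounds describing the target color.
struct ColorRange { Rgb lo, hi; };

bool inRange(const Rgb& p, const ColorRange& c) {
    return p.r >= c.lo.r && p.r <= c.hi.r &&
           p.g >= c.lo.g && p.g <= c.hi.g &&
           p.b >= c.lo.b && p.b <= c.hi.b;
}

// Returns the horizontal offset of the matching pixels' centroid from the
// image center, scaled to [-1, 1]; negative means the target is left of
// center. Sets found to false when no pixel matches.
double targetOffset(const std::vector<Rgb>& image, std::size_t width,
                    std::size_t height, const ColorRange& range, bool& found) {
    assert(width > 0 && image.size() == width * height);
    double sumX = 0.0;
    std::size_t count = 0;
    for (std::size_t y = 0; y < height; ++y) {
        for (std::size_t x = 0; x < width; ++x) {
            if (inRange(image[y * width + x], range)) {
                sumX += static_cast<double>(x);
                ++count;
            }
        }
    }
    found = count > 0;
    if (!found) return 0.0;
    double center = (static_cast<double>(width) - 1.0) / 2.0;
    if (center == 0.0) return 0.0;  // single-column image
    return (sumX / static_cast<double>(count) - center) / center;
}
```

A centering behavior could turn the robot in proportion to the returned offset until it is close to zero, then drive forward.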

Problems We Encountered

By far the biggest problem we've encountered is

the one we found today - namely that our USB camera has

stopped working. We are not sure what the problem is, but we

think it might have to do with the cable. Until we can get the

camera working (or get a new camera) we won't be able to

progress on the vision aspects of the project.

The next problem we're trying to solve is

converting the velocities sent back by the robot into usable

information. So far the algorithms we've tried have all

failed, and since many behaviors (including avoid walls) need

to know the wheel velocities that the robot is sending back,

it's crucial that we get this fixed as soon as possible.

Evaluation

Although we don't feel like we got as many

things working in this project as we did in the last one, we

still completed 3 of our 4 goals. We implemented a working

keyboard control of the robot's velocity and direction. We

used the interface to test our set velocity algorithm,

discovered a problem, figured out what was wrong, and fixed

the algorithm. We got the robot to identify coke cans and head

towards them (we'd post pictures or a movie but the camera

isn't working). Even though it wasn't one of our goals we

wrote a pretty spiffy GUI to display the sonar readings. The

only thing we weren't able to do was fix the avoid walls

behavior, but we're fairly certain of where the problem lies.

The only thing we need to do is figure out how to reverse

engineer our set velocity algorithm to convert the velocity

values reported by the robot into usable information. We're

pretty sure once we do that a lot of the problems we've been

having will disappear.

In the next project we'd like to work on

solving our problems with the reported velocities, getting the

camera fixed, and writing firing sequences for the

sonar.


Project 4

The Goals

The goals of this project include:

-

Write a neural network to teach the robot how

to avoid walls and wander

-

Get the USB Camera to work

-

Make everything work

Our Work

We decided to bypass the entire problem of

decoding the returned wheel velocities by writing a neural

network that would teach the robot to avoid walls and wander.

The network used backpropagation to learn the functional

relationship between sonar readings and desired wheel

velocities. It accomplished this by training off of

approximately 55 possible scenarios. For example, if the sonar

transducers on the right side of the robot were reading low and

the transducers on the left were reading high, the network was

trained to go to the left. The network turned out to be rather

effective at extrapolating solutions to unusual situations.

For example, wandering around corners was not one of the 55

given scenarios, and yet, on its first try the network

navigated a corner correctly.

We still aren't sure why the USB camera has

been acting up, but if we had to make a guess we'd say it was

the firmware. We're not really sure how to fix this, and since

the camera seems to work more often than not, we just left it

alone.

Integrating everything was one of the most

difficult parts of this project. We were able to get the USB

camera, the behaviors (including the new neural_wander), and

Robot Control all working at the same time. Unfortunately,

Festival conflicted with the Video for Linux so we were not

able to run the speech package in conjunction with everything

else.

One of the things we did that wasn't originally

one of our goals was to write a neural network that learned

the color of the coke can. First a user used cursors and lines

to block off an area inside an image that was "coke." Then the

program took a random 3x3 pixel sample of the image,

determined if it was "coke" (1) or "not coke" (0) and fed

those pixels to the neural network to teach the network what

"coke" was. Unfortunately, the neural network wasn't finished

in time (i.e., it wasn't working effectively enough) to

integrate it into this project.
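
The sampling step described above might look something like the following sketch. Several details are assumptions made for illustration: the marked "coke" area is treated as an axis-aligned rectangle, a patch is labeled by whether its center pixel falls inside that rectangle, and the 3x3 RGB patch is flattened into 27 inputs scaled to [0, 1]. The type and function names (`Image`, `Region`, `Sample`, `makeSample`, `randomSample`) are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <random>
#include <vector>

struct Image {
    int width;
    int height;
    std::vector<std::uint8_t> rgb;  // interleaved R, G, B, row-major
};

// User-marked "coke" area; assumed rectangular, bounds inclusive.
struct Region {
    int left, top, right, bottom;
};

struct Sample {
    std::vector<double> inputs;  // 3x3 pixels * 3 channels = 27 values
    double label;                // 1 = "coke", 0 = "not coke"
};

// Builds one training sample from the 3x3 patch centered on (cx, cy).
Sample makeSample(const Image& img, const Region& coke, int cx, int cy) {
    assert(cx >= 1 && cx < img.width - 1 && cy >= 1 && cy < img.height - 1);
    Sample s;
    s.inputs.reserve(27);
    for (int dy = -1; dy <= 1; ++dy) {
        for (int dx = -1; dx <= 1; ++dx) {
            const std::size_t idx =
                3 * (static_cast<std::size_t>(cy + dy) *
                         static_cast<std::size_t>(img.width) +
                     static_cast<std::size_t>(cx + dx));
            for (int c = 0; c < 3; ++c)
                s.inputs.push_back(img.rgb[idx + c] / 255.0);
        }
    }
    // Assumption: the center pixel decides the label.
    const bool inside = cx >= coke.left && cx <= coke.right &&
                        cy >= coke.top && cy <= coke.bottom;
    s.label = inside ? 1.0 : 0.0;
    return s;
}

// Picks a random patch that lies fully inside the image.
Sample randomSample(const Image& img, const Region& coke, std::mt19937& rng) {
    std::uniform_int_distribution<int> xs(1, img.width - 2);
    std::uniform_int_distribution<int> ys(1, img.height - 2);
    const int cx = xs(rng);
    const int cy = ys(rng);
    return makeSample(img, coke, cx, cy);
}
```

Each `Sample` would then be fed to the color network as one training example, with `inputs` as the network input and `label` as the target output.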

Another thing we worked on was modifying the

bump behavior. Now, instead of just backing up, the robot

rotates based on which sonars are registering low values. So

now if the robot hits a wall next to a corner and its right

side is blocked off, the robot will back up, turn left, and

resume going straight.
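
As a rough sketch of that decision, the revised bump behavior could compare the closest reading on each side and turn away from the more blocked side. The names (`Turn`, `BumpRecovery`, `planBumpRecovery`), the millimeter units, the back-up distance, and the turn angle below are assumptions for illustration, not values taken from our code.

```cpp
#include <algorithm>
#include <cassert>
#include <limits>
#include <vector>

enum class Turn { Left, Right };

// Hypothetical recovery plan: back up, rotate, then resume going straight.
struct BumpRecovery {
    int backupMm;  // assumed back-up distance
    Turn turn;     // direction to rotate after backing up
    int turnDeg;   // assumed rotation angle
};

// Closest reading in a group of sonars; an empty group counts as
// "nothing in range".
int closest(const std::vector<int>& rangesMm) {
    if (rangesMm.empty()) return std::numeric_limits<int>::max();
    return *std::min_element(rangesMm.begin(), rangesMm.end());
}

// Turn away from whichever side reports the lower (more blocked) range.
// A tie is resolved by turning left, which is an arbitrary choice.
BumpRecovery planBumpRecovery(const std::vector<int>& leftMm,
                              const std::vector<int>& rightMm) {
    const int kBackupMm = 300;  // assumption
    const int kTurnDeg = 45;    // assumption
    const Turn turn =
        (closest(rightMm) <= closest(leftMm)) ? Turn::Left : Turn::Right;
    return BumpRecovery{kBackupMm, turn, kTurnDeg};
}
```

With the right side blocked (low right-hand readings), the plan comes out as back up, turn left, and resume going straight, matching the corner case described above.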

Problems We Encountered

The major problem we encountered was avoiding

walls. The neural network works about half of the time in that

the robot doesn't hit any walls. In the cases that the robot

does hit a wall, about half of the time the bump behavior is

effective in moving the robot away from the wall and the rest

of the time the robot gets stuck in a loop, hitting the wall,

backing up, hitting the wall, etc. We think the large majority

of these problems are (again!) stemming from the sonar.

However, we no longer feel that bad values are at fault;

we now think the problem is bad reflections. Most of the time

when the robot is following the wall the side sonars register

the wall as being there and the robot avoids it. However, if

the robot gets a tad bit off parallel, the side sonar is no

longer perpendicular to the wall. The small change in angle

(~10 degrees) is enough to result in a large

distance-to-the-wall difference which causes the sonar to

think that the wall is no longer there. Consequently, the

robot continues on course and ends up running into the wall.

We're not quite sure how to fix this except to invest in one

of those nice laser range-finders.

Our camera still acts schizophrenic from time

to time, which can be a problem (like when it stops working

right before a presentation...). Again, we're not sure how to

fix this (or if we can fix this).

In general, our vision software is pretty

effective. For example, during pre-frosh weekend one of the

prospective students tried to confuse the Pioneer during a

trial by placing a Dr. Pepper can next to the coke can. The

Pioneer completely ignored the Dr. Pepper can and went

straight towards the coke can. It turned out that the network

did pick up some (~5) pixels on the Dr. Pepper can that were

coke colored, but there were so many more pixels that matched

on the coke can that the Pioneer headed towards it instead.

The problem is flesh tones and wood tones. Flesh tones and

wood tones seem to contain the same red (R) value as the coke can,

and as a result the Pioneer loves to run towards doors and

legs. Our solution to this was to aim the camera as low to

the ground as possible.
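
One way to picture why a handful of stray matches on the Dr. Pepper can did not pull the Pioneer off course is to steer toward the centroid of all matched pixels, so that the larger cluster dominates. The sketch below is an assumption about how such a steering signal could be computed, not a description of our vision code. The function name `steeringOffset` and the boolean match mask are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Returns the horizontal offset of the matched pixels' centroid from the
// image center, scaled to [-1, 1] (negative = left, positive = right).
// Returns 0 when no pixel matched. `mask` is row-major, one flag per pixel.
double steeringOffset(const std::vector<bool>& mask, int width, int height) {
    assert(mask.size() == static_cast<std::size_t>(width) *
                              static_cast<std::size_t>(height));
    long long count = 0;
    long long sumX = 0;
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            if (mask[static_cast<std::size_t>(y) * width + x]) {
                ++count;
                sumX += x;
            }
        }
    }
    if (count == 0 || width < 2) return 0.0;
    const double centroid = static_cast<double>(sumX) / count;
    const double center = (width - 1) / 2.0;
    return (centroid - center) / center;
}
```

With a few matched pixels on the left and a much larger matched blob on the right, the offset comes out clearly positive, so the robot would still head for the larger blob. The same arithmetic also shows the weakness noted above: a large door or leg with a similar red value would dominate the centroid just as easily.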

Evaluation

Well, it's been quite a semester. The one thing

we'd change if we could would be to add a heuristic

wall-avoidance algorithm - something the neural network could fall back

on. In general we're pretty pleased with our work. We were

able to write (modular!) software from scratch based on

subsumption architecture that produced an emergent behavior of

finding coke cans. By writing a new Robot Control we can

implement our software on any robot, and by adding (or

subtracting) behaviors we can completely change the emergent

behavior.

We're a little bit disappointed that we weren't

able to integrate the GUI or the speech package into our final

product, but these are always things we can try and implement

next year. Also we never got around to writing a "return to

base" algorithm - that might also be something we could work

on next year. We'd also like to come up with a more permanent

solution to the USB and sonar problems (like getting a new

camera and adding IR sensors to the robot). At any rate, we

still have a lot to look forward to next

year.


Presentation

Our Pioneer project was presented at Harvey

Mudd College's Presentation Days 2001. Here are the slides

from the presentation.

If you're using Netscape (which is awful at

supporting fancy things like CSS and DHTML), you can download

the PowerPoint version of our

presentation slides.

We were also able to obtain several movies of

the Pioneer in action.

- Wall Hit - This movie shows some of the problems we've had with the Pioneer hitting walls.

- Color Problems - This movie illustrates the problems we've had with the vision software being confused by flesh tones.

- Wall Avoid - This movie shows the neural network working and a wall being avoided.

- Success! - This movie shows the Pioneer navigating a corner of the hallway and picking up a coke can.
